How Kubernetes Supports Distributed Workloads in Modern Grid Systems

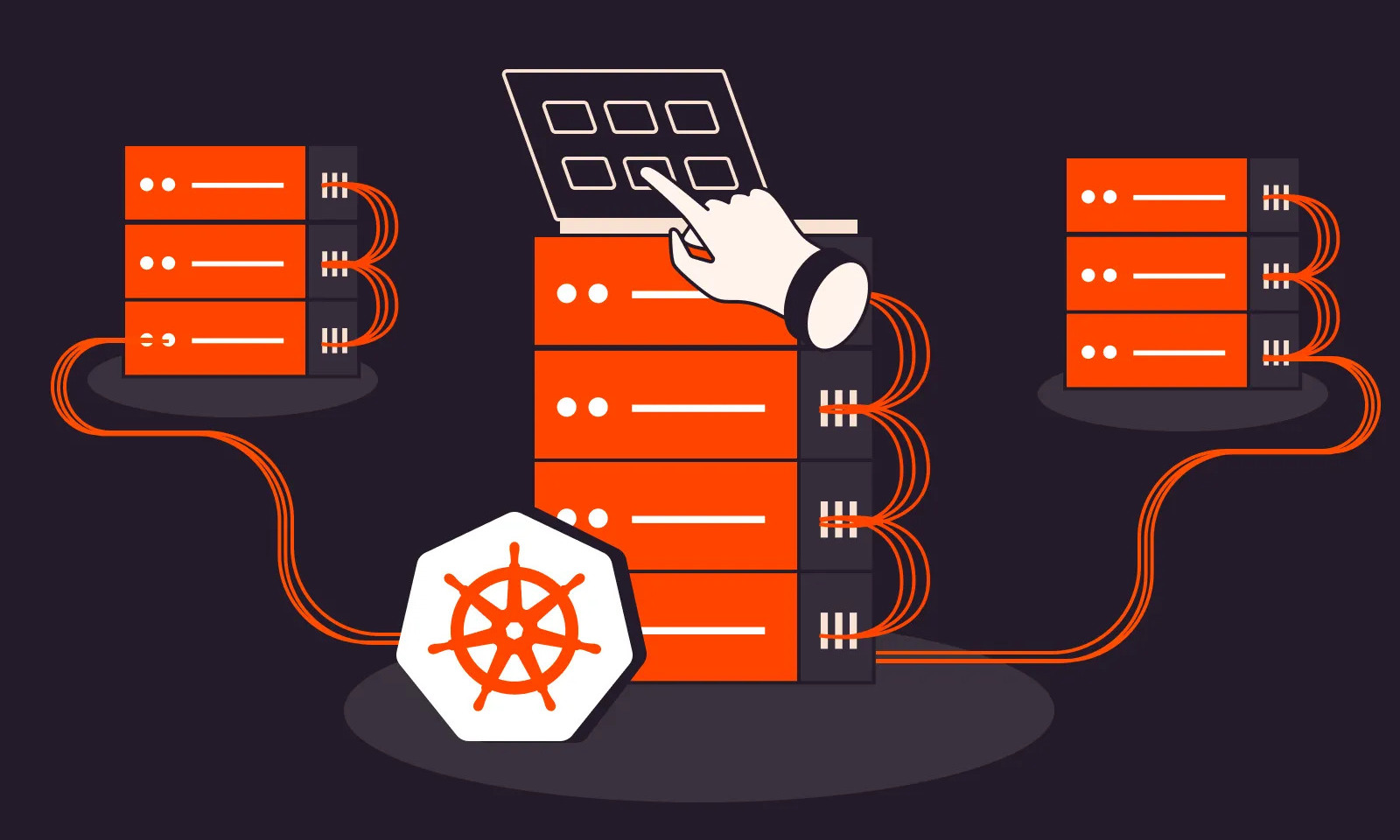

Grid computing involves data volumes and computational tasks at a scale that demands dedicated management tooling. Kubernetes is one of the most widely adopted options. Although it is best known for deploying web applications, it also automates deployment and resource management across large distributed systems. For teams handling grid workloads such as simulations, scientific computations, or financial modeling, Kubernetes can make workflows more efficient by handling scheduling, scaling, and load balancing for tasks that are distributed but need to run at the same time.

Because of its orchestration capabilities, more IT teams are adopting Kubernetes for grid workflows. It allows them to manage vast numbers of computational nodes that previously needed manual configuration. This automation means teams can spend less time on setup and more time focusing on results.

What Grid Computing Is and Why It Matters

Grid computing is a system where large tasks are divided into smaller parts and distributed across multiple machines or nodes. Think of it like a puzzle, where each piece is worked on at the same time, leading to faster overall completion. It’s widely used in weather forecasting, scientific research, and business operations like fraud detection and risk analysis.
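As a rough illustration of the divide-and-distribute idea, the sketch below splits a set of work items into near-equal contiguous chunks, one per worker. The function name `partition` and the example sizes are assumptions made for illustration; real grid frameworks use their own partitioning schemes.

```python
def partition(total_items: int, workers: int) -> list[range]:
    """Split item indices 0..total_items-1 into `workers` contiguous chunks."""
    if workers <= 0:
        raise ValueError("workers must be positive")
    base, extra = divmod(total_items, workers)
    chunks = []
    start = 0
    for i in range(workers):
        # The first `extra` workers take one additional item each,
        # so chunk sizes differ by at most one.
        size = base + (1 if i < extra else 0)
        chunks.append(range(start, start + size))
        start += size
    return chunks


if __name__ == "__main__":
    for worker_id, chunk in enumerate(partition(10, 3)):
        print(worker_id, list(chunk))
```

Each worker can then process its own chunk independently, which is what lets the pieces of the puzzle be worked on at the same time.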

However, managing such a distributed environment is not easy. Tasks must be monitored, allocated efficiently, and run reliably. This is where Kubernetes proves valuable, offering automated tools to help manage these resources in a grid setup. By integrating Kubernetes, organizations gain a more organized, responsive, and resilient computing environment.

How Kubernetes Enhances Grid Architecture

One of Kubernetes’ strongest advantages is its ability to manage resources dynamically based on current needs. In grid computing, where numerous tasks run concurrently, it is crucial that memory and processing power are not left idle or oversubscribed. The Kubernetes scheduler assigns pods (the smallest deployable units, each wrapping one or more containers) to nodes that have enough free CPU and memory for the pod's declared resource requests, all without manual input.

This leads to cleaner, more efficient grid workflows. Even when a node encounters issues, Kubernetes can redeploy pods automatically, maintaining system uptime. As a result, organizations get a more robust system capable of adapting to fluctuating demands.
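The placement decision can be pictured as two phases: filter out nodes that cannot fit the pod, then score the remaining ones. The following is a minimal sketch of that idea only. The `Node` and `PodRequest` types and the scoring rule (prefer the node with the most CPU left over) are simplifying assumptions, not the actual kube-scheduler logic.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millis: int  # unreserved CPU, in millicores
    free_mem_mib: int     # unreserved memory, in MiB


@dataclass
class PodRequest:
    cpu_millis: int
    mem_mib: int


def pick_node(pod: PodRequest, nodes: list[Node]) -> Optional[Node]:
    # Filtering: keep only nodes that can satisfy the pod's requests.
    feasible = [
        n for n in nodes
        if n.free_cpu_millis >= pod.cpu_millis and n.free_mem_mib >= pod.mem_mib
    ]
    if not feasible:
        return None  # in a real cluster the pod would stay Pending
    # Scoring (simplified assumption): prefer the most CPU left after placement.
    return max(feasible, key=lambda n: n.free_cpu_millis - pod.cpu_millis)
```

The real scheduler combines many more criteria, such as affinity rules and taints, but the filter-then-score shape is the same.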

Dynamic Scaling of Tasks and Resources

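Workloads in grid systems can vary widely. Kubernetes can automatically scale the number of pods up or down through the Horizontal Pod Autoscaler, which compares observed metrics such as CPU utilization against a configured target. If there is a sudden increase in data processing needs, Kubernetes starts new pods to handle the load. If demand drops, it reduces the number of active pods, and when paired with a cluster autoscaler the idle nodes can be released as well, which is where most of the energy and cost savings come from.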

This elastic scaling is ideal for grid environments with unpredictable workloads. It ensures that performance remains stable without over-provisioning resources.
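The Horizontal Pod Autoscaler's core calculation is documented as desired replicas = ceil(current replicas × current metric / target metric), with no change when the ratio is within a small tolerance (0.1 by default). The sketch below reproduces that calculation; the function name and the replica bounds are illustrative assumptions.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    ratio = current_metric / target_metric
    # Within tolerance of the target: leave the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For example, four pods averaging 90% CPU against a 60% target would be scaled to six, while four pods at 30% would be scaled down to two.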

Ensuring Stability and Fault Tolerance

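In grid computing, a single node failure can disrupt an entire operation. Kubernetes addresses this with built-in self-healing. If a container crashes, the kubelet restarts it on the same node according to the pod's restart policy. If a node fails, controllers such as Deployments and Jobs create replacement pods on other available nodes. Containers that keep crashing are restarted with an increasing back-off delay, and a Job can cap its retries with its backoffLimit setting, so a persistently failing task does not consume resources indefinitely.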

This fault-tolerant design adds reliability to the grid system, allowing continuous operation even when unexpected issues occur. For critical tasks in industries like science and finance, this reliability is essential.
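One concrete mechanism for handling repeated failures is the kubelet's restart back-off: a crashing container is restarted after 10 seconds, then 20, then 40, and so on, capped at five minutes. The sketch below just computes that schedule; the function name is an assumption for illustration.

```python
def restart_delays(restarts: int, base_seconds: int = 10,
                   cap_seconds: int = 300) -> list[int]:
    """Delay before each successive restart, doubling until the cap."""
    return [min(base_seconds * 2 ** i, cap_seconds) for i in range(restarts)]


if __name__ == "__main__":
    print(restart_delays(7))
```

The growing delay keeps a broken task from monopolizing a node while still giving transient faults a chance to clear.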

Security Considerations in Grid Workflows

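Security is a top priority in distributed systems. Kubernetes supports role-based access control (RBAC), which restricts each user or service account to the API operations it has been granted. Communication with the API server is protected by TLS, and the API server authenticates every request, for example through client certificates or tokens. Traffic between pods is not encrypted by default; that typically requires a service mesh or a network plugin that provides encryption.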

With these features, sensitive data in grid computing, such as medical or financial information, can be protected. When API server audit logging is enabled, audit logs also provide transparency and traceability, helping teams track who did what across the system.
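To make the access-control idea concrete, here is a minimal sketch that builds a read-only RBAC Role and a matching RoleBinding as Python dictionaries and prints them as JSON, which `kubectl apply -f` accepts alongside YAML. The namespace `grid`, the role name `grid-job-viewer`, and the user `alice` are hypothetical.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def read_only_role(namespace: str, name: str = "grid-job-viewer") -> dict:
    read_verbs = ["get", "list", "watch"]
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"namespace": namespace, "name": name},
        "rules": [
            # Core API group ("") holds pods and their logs.
            {"apiGroups": [""], "resources": ["pods", "pods/log"], "verbs": read_verbs},
            # Jobs live in the "batch" API group.
            {"apiGroups": ["batch"], "resources": ["jobs"], "verbs": read_verbs},
        ],
    }


def bind_role(role: dict, user: str) -> dict:
    meta = role["metadata"]
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"namespace": meta["namespace"], "name": f"{meta['name']}-{user}"},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": meta["name"], "apiGroup": RBAC_GROUP},
    }


if __name__ == "__main__":
    role = read_only_role("grid")
    print(json.dumps(role, indent=2))
    print(json.dumps(bind_role(role, "alice"), indent=2))
```

A user bound this way can inspect jobs and read pod logs in one namespace but cannot create or delete anything.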

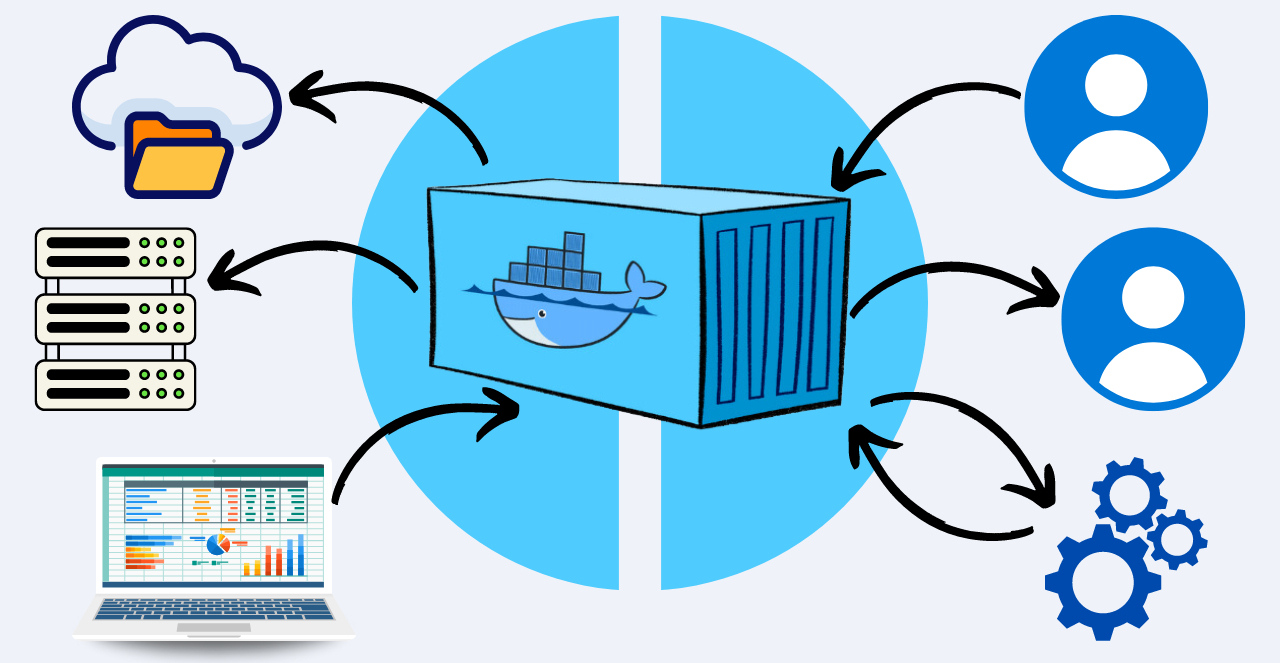

Using Containers for Modular Grid Tasks

Kubernetes is known for its robust container management. Containers allow tasks to be packaged with all their dependencies, making them easy to deploy across different nodes. In grid computing, this modularity means that once a task is containerized, the same image can run on any node or environment without being rebuilt, with environment-specific settings supplied at deploy time.

This leads to fewer errors, faster setup, and easier debugging. A container-based approach brings consistency and efficiency to grid workflows.
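A containerized grid task maps naturally onto a Kubernetes Job. The sketch below builds a Job manifest in Indexed completion mode, where each pod receives its own index through the `JOB_COMPLETION_INDEX` environment variable and can use it to pick its share of the work. The job name, image reference, and resource figures are hypothetical.

```python
import json


def indexed_job(name: str, image: str, tasks: int, parallelism: int) -> dict:
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "completionMode": "Indexed",  # pods get indexes 0..tasks-1
            "completions": tasks,         # total number of work items
            "parallelism": parallelism,   # how many pods run at once
            "backoffLimit": 3,            # retries before the Job is marked failed
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [{
                        "name": "worker",
                        "image": image,
                        "resources": {"requests": {"cpu": "1", "memory": "2Gi"}},
                    }],
                }
            },
        },
    }


if __name__ == "__main__":
    job = indexed_job("grid-sim", "registry.example.com/grid/simulate:1.0", 100, 10)
    print(json.dumps(job, indent=2))
```

The printed JSON can be piped to `kubectl apply -f -`, and the same image then runs unchanged on whichever nodes the scheduler selects.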

System-Wide Observability and Monitoring

Real-time monitoring is critical in grid environments. Kubernetes integrates with tools like Prometheus and Grafana for full-system visibility. These tools display the health and status of nodes and tasks in one dashboard, allowing teams to detect bottlenecks or failures quickly.

Alerts can be configured to notify admins of performance issues before they become critical. With reliable observability, teams can make informed decisions and proactively maintain system health.
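Alerting usually avoids reacting to a single noisy sample. The sketch below fires only when the most recent samples have all exceeded a threshold, similar in spirit to the `for` clause of a Prometheus alerting rule. It is a simplified stand-in, not Prometheus code, and the function name and sample values are assumptions.

```python
def should_alert(samples: list[float], threshold: float, consecutive: int) -> bool:
    """True if the most recent `consecutive` samples all exceed `threshold`."""
    if consecutive <= 0 or len(samples) < consecutive:
        return False
    return all(value > threshold for value in samples[-consecutive:])


if __name__ == "__main__":
    cpu = [0.50, 0.95, 0.97, 0.99]  # hypothetical node CPU utilization samples
    print(should_alert(cpu, threshold=0.9, consecutive=3))
```

Requiring a sustained breach trades a little detection latency for far fewer false alarms.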

Integration with Other Tools for Broader Workflows

Kubernetes also supports integration with other popular tools used in grid computing, such as Apache Spark, Hadoop, and batch processing frameworks. This means existing workflows don’t need to be replaced—Kubernetes simply enhances them with better automation and scalability.

By acting as the orchestration backbone, Kubernetes allows teams to focus on task execution rather than infrastructure setup.
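As one example of such integration, Apache Spark can submit applications directly to a Kubernetes cluster by pointing `spark-submit` at a `k8s://` master URL. The sketch below assembles that command line. The API server address, image, class name, and jar path are hypothetical placeholders, and a real submission usually needs further settings such as a service account.

```python
def spark_submit_args(api_server: str, image: str, app_jar: str,
                      main_class: str, executors: int = 5) -> list[str]:
    return [
        "spark-submit",
        "--master", f"k8s://{api_server}",  # target the Kubernetes API server
        "--deploy-mode", "cluster",          # the driver also runs in a pod
        "--class", main_class,
        "--conf", f"spark.executor.instances={executors}",
        "--conf", f"spark.kubernetes.container.image={image}",
        app_jar,
    ]


if __name__ == "__main__":
    args = spark_submit_args(
        "https://k8s.example.com:6443",        # hypothetical API server
        "registry.example.com/spark:latest",   # hypothetical Spark image
        "local:///opt/app/job.jar",            # hypothetical jar inside the image
        "com.example.GridJob",                 # hypothetical main class
    )
    print(" ".join(args))
```

The existing Spark job itself does not change; only the cluster manager it is submitted to does.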

Smarter Deployment of Grid Projects

Deploying grid projects can be complex, especially when updates are frequent and systems are distributed. Kubernetes does not ship a CI/CD system of its own, but its declarative API fits well into CI/CD pipelines, allowing teams to test updates in staging environments before pushing them to production. This process minimizes human error and ensures smoother transitions. Because the desired state is described in plain manifest files, those files can be kept in version control alongside the code, so every change is reviewable and reversible.

The benefit of CI/CD integration goes beyond convenience. It enables continuous improvements without interrupting live operations. Teams can implement rolling updates, rollback options, and automated testing routines, all of which support consistent system health and faster innovation within grid infrastructures.
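During a rolling update, a Deployment limits how far the pod count may stray from the desired number through its maxSurge and maxUnavailable settings, both 25% by default. A percentage for maxSurge is rounded up and one for maxUnavailable is rounded down. The sketch below computes the resulting bounds; the function name is an assumption for illustration.

```python
def rolling_update_bounds(replicas: int, max_surge_pct: int = 25,
                          max_unavailable_pct: int = 25) -> tuple[int, int]:
    """Return (max total pods, min available pods) during a rolling update."""
    # Integer arithmetic avoids floating-point rounding surprises.
    surge = -(-replicas * max_surge_pct // 100)          # rounds up
    unavailable = replicas * max_unavailable_pct // 100  # rounds down
    return replicas + surge, replicas - unavailable


if __name__ == "__main__":
    print(rolling_update_bounds(10))
```

With ten replicas and the defaults, at most thirteen pods exist at once and at least eight stay available, which is why live operations continue while the new version rolls out.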

In short, Kubernetes doesn’t just simplify deployment—it enhances performance and reduces operational risks. With better testing, monitoring, and rollback capabilities, grid computing workflows become more reliable, scalable, and resilient in the face of evolving demands.

Powering Large-Scale Task Management

Managing large-scale tasks across hundreds of nodes is a logistical challenge, especially in dynamic environments like grid computing. Kubernetes addresses this with a unified orchestration system that handles automated deployment, real-time scaling, and intelligent scheduling. By centralizing control, teams gain a clearer understanding of task status and resource utilization.

This clarity translates into better planning and execution. Instead of manually distributing jobs and tracking progress across fragmented platforms, Kubernetes allows for a streamlined approach. Administrators can monitor workloads, redistribute tasks on the fly, and maintain overall system health using real-time dashboards and alerts.

For organizations tackling massive projects—whether climate simulations, genomic research, or enterprise analytics—Kubernetes provides a dependable foundation. It transforms scattered operations into a coordinated, efficient system that can adapt to growth, mitigate failures, and deliver consistent outcomes.