The Foundation of Scalability: Why Distributed Systems Matter

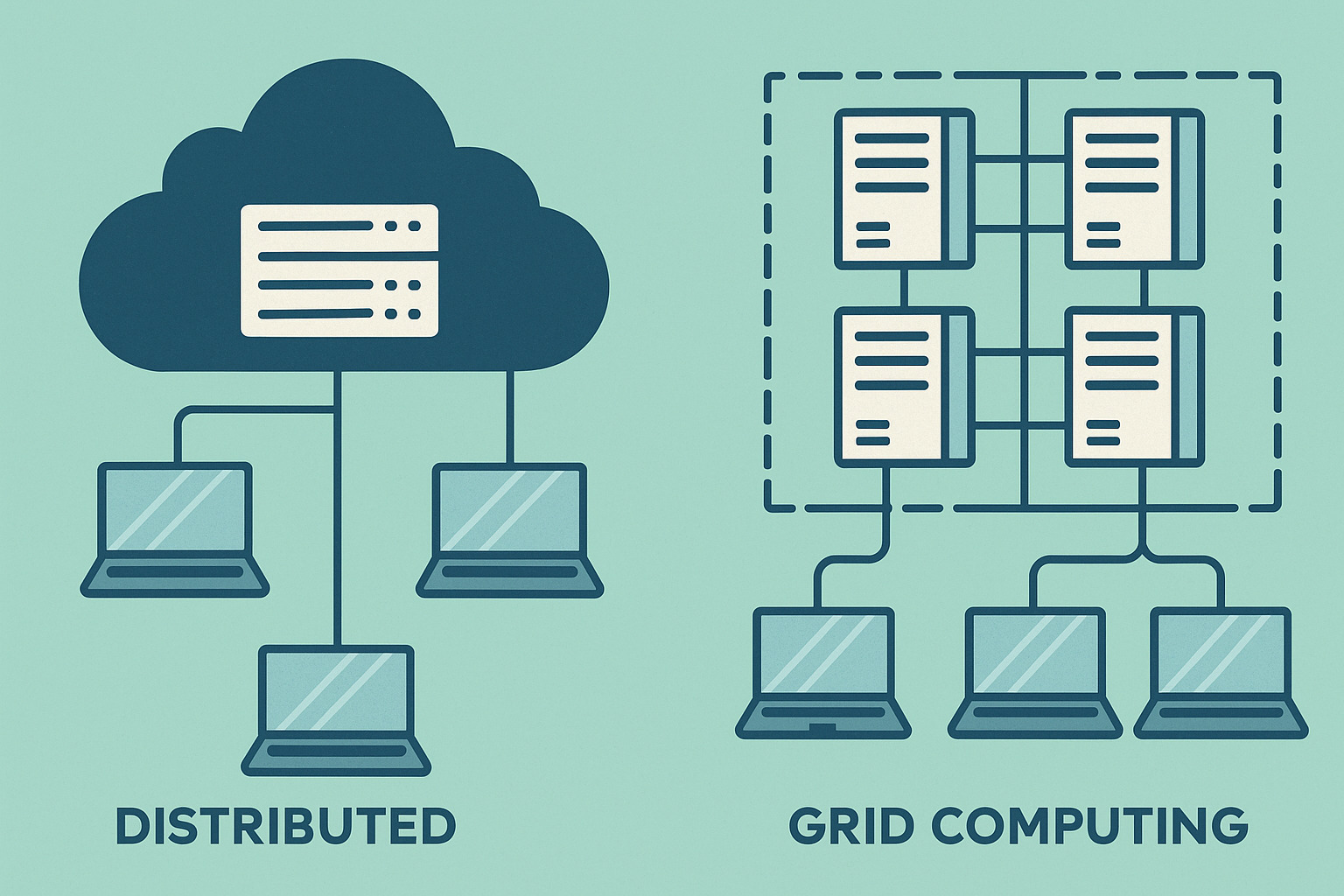

Rapid user growth is a good problem to have—but if your system can’t keep up, delays, errors, or downtime can follow. In these situations, distributed systems are the typical solution. The idea is simple: divide tasks and spread them across multiple machines. This way, no single server carries the entire load.

For example, imagine a website used by millions of people daily. If a single server handles every request, it will eventually run out of CPU, memory, or connections and start timing out or crashing. With a network of interconnected servers, each machine handles only a share of the requests, so no single one is overwhelmed. Every component plays a role, working together to maintain performance.

Building distributed systems is not just about hardware—it also requires solid design. That means the right structure, clear communication, and balanced workloads. These are the keys to achieving real scalability.

Planning Architecture Before Coding

Before setting up a distributed system, you need a clear plan. It’s not enough to deploy servers quickly; you must know how each component will communicate. The system must be designed to handle increasing workloads without slowing down.

The architectural plan should be aligned with your application’s goals. A system meant for millions of users will need a different layout from one built for thousands. Questions like “What is the core function?”, “What do users do most?”, and “Where are the bottlenecks?” should be answered before writing code.

Think of it as a building blueprint. Before construction begins, you need to know how many floors, how many doors, and what materials to use. In systems engineering, proper planning is the first step toward scalability.

Load Balancing: Even Distribution, Less Waiting

One of the most critical parts of distributed systems is load balancing. Instead of one server handling all requests, the traffic is split across different nodes—like multiple open checkout lanes at a grocery store.

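A load balancer directs each incoming request to a server according to a policy, such as round-robin or choosing the server with the lightest current load, which avoids traffic jams at a single server. For instance, if you have 10 servers that can each handle 100 concurrent users, they can collectively support roughly 1,000 users, provided traffic is spread evenly and no shared dependency (such as the database) becomes the bottleneck.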
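The least-loaded policy can be sketched in a few lines of Python. This is a minimal illustration, not how any particular load balancer is implemented: the `Server` and `LeastLoadedBalancer` names and the server names are hypothetical, and real balancers also track health checks and timeouts.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    active_requests: int = 0  # requests currently being handled


class LeastLoadedBalancer:
    """Routes each request to the server with the fewest active requests."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self.servers = list(servers)

    def route(self):
        # min() returns the first server with the smallest count, so ties
        # go to the server listed earliest.
        target = min(self.servers, key=lambda s: s.active_requests)
        target.active_requests += 1
        return target

    def finish(self, server):
        # Call when a request completes so the server's load drops again.
        server.active_requests = max(0, server.active_requests - 1)


# Hypothetical pool of three servers receiving six requests.
servers = [Server("app-1"), Server("app-2"), Server("app-3")]
balancer = LeastLoadedBalancer(servers)
assignments = [balancer.route().name for _ in range(6)]
```

Because no request finishes during the loop, the six requests end up spread evenly, two per server.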

Effective load balancing goes beyond technology. It also requires observation—finding out where the system slows down. Sometimes, a single function causes a bottleneck. That’s why monitoring tools and metrics are essential in managing traffic.

Horizontal Scaling: More Machines, Not Bigger Ones

When a server is maxed out, you can scale in two ways: vertically or horizontally. Vertical scaling means upgrading a machine’s power—more RAM or faster CPUs—but this has limits. That’s why horizontal scaling is often preferred.

With horizontal scaling, you increase the number of machines. From 3 servers, you can scale to 6 or 10 as needed. This is how elastic scaling works on cloud platforms: instances are added when demand rises and removed when it falls.

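Horizontal scaling is often more budget-friendly in the long term, because basic servers are cheaper to add or replace than a high-end machine is to upgrade. It also adds redundancy: if one machine fails, the others can absorb its traffic and keep the system running, as long as there is spare capacity.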
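To make the "from 3 servers to 6" idea concrete, here is a rough capacity calculation. It is only a sketch: the function name, the 20% headroom default, and the per-instance capacity of 100 users are assumptions chosen for illustration, and real autoscalers usually react to measured metrics rather than a single estimate.

```python
def instances_needed(expected_users, users_per_instance,
                     headroom_percent=20, minimum=1):
    """Return how many identical instances are needed for the expected load.

    headroom_percent adds spare capacity so that losing one machine or a
    short traffic burst does not immediately overload the rest.
    """
    if users_per_instance <= 0:
        raise ValueError("users_per_instance must be positive")
    # Integer ceiling division avoids floating-point rounding surprises.
    numerator = expected_users * (100 + headroom_percent)
    denominator = 100 * users_per_instance
    return max(minimum, -(-numerator // denominator))


# Assumed scenario: 3 servers running, 500 concurrent users expected.
current = 3
target = instances_needed(expected_users=500, users_per_instance=100)
to_add = max(0, target - current)
```

With these assumed numbers, `target` is 6 and `to_add` is 3, so the pool would double.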

Microservices: Breaking Down the Monolith

Microservices are a huge help in building distributed systems. Instead of one massive application, the system is split into small, independent services, each with its own function and deployment. Each service can then be scaled, debugged, and updated on its own.

In an e-commerce platform, for instance, user login, shopping cart, payments, and order tracking can be separate services. If cart usage spikes, only that service is scaled—leaving others unaffected.

This approach allows flexibility in development. Different teams can build different services, without needing to deploy all at once. Feature updates are faster, and if one service fails, the rest continue to work.

Caching Systems: Less Access, Faster Response

Caching is one of the most effective ways to speed up system responses. Instead of querying the database every time, frequently accessed data is stored in a cache—a fast-access memory area. Think of it as the system’s short-term memory.

With caching, the load on the main database is reduced. For example, if thousands of users access the same product page hourly, the server doesn’t fetch the data repeatedly. Instead, it serves the cached result instantly.

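Popular tools like Redis and Memcached keep this data in memory, which makes lookups much faster than a typical database query. However, correct configuration is key to avoiding stale data. Usually, a time-to-live (TTL) is set on each entry so that it expires and is reloaded from the database the next time it is requested.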
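The TTL idea can be illustrated with a small in-process cache. This is a sketch only and is not the Redis or Memcached API: `TTLCache`, `get_or_load`, and `load_product` are hypothetical names, and the clock is injectable purely so the expiry can be demonstrated without waiting.

```python
import time


class TTLCache:
    """Stores values for ttl_seconds, then reloads them on the next request."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self._clock = clock
        self._entries = {}  # key -> (value, expiry timestamp)

    def get_or_load(self, key, loader):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]  # cache hit: skip the database
        value = loader(key)  # miss or expired: go to the source
        self._entries[key] = (value, now + self.ttl_seconds)
        return value


db_calls = []  # records every simulated database query


def load_product(product_id):
    # Stand-in for a real database query.
    db_calls.append(product_id)
    return {"id": product_id, "name": f"Product {product_id}"}


fake_now = [0.0]  # controllable clock for the demonstration
cache = TTLCache(ttl_seconds=60, clock=lambda: fake_now[0])

cache.get_or_load(42, load_product)  # miss: queries the "database"
cache.get_or_load(42, load_product)  # hit: served from the cache
fake_now[0] = 61.0                   # more than 60 seconds later
cache.get_or_load(42, load_product)  # expired: queries again
```

Three requests result in only two database calls here. A shorter TTL gives fresher data at the cost of more database traffic, so the value is a trade-off to tune per use case.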

Messaging Queues: Organizing Backend Traffic

When many processes are running, not everything must be done immediately. Messaging queues help line up tasks in order—like a bank queue, where transactions are processed one by one.

They’re commonly used for background jobs. For example, if a user uploads a large file to a website, they don’t have to wait for it to be processed. The task is queued and handled in the background while the page stays responsive.

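Message brokers like RabbitMQ and event streaming platforms like Apache Kafka help work flow more smoothly between services. They decouple the components that produce tasks from those that process them, and a growing backlog in a queue makes bottlenecks easier to spot. In complex applications, messaging systems act like traffic controllers.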
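The upload example above can be sketched with Python's standard `queue` and `threading` modules. This in-process queue is only a stand-in for a real broker such as RabbitMQ or Kafka, and `handle_upload`, `worker`, and the file names are hypothetical.

```python
import queue
import threading

task_queue = queue.Queue()
processed = []  # results produced by the background worker


def worker():
    # Pulls tasks off the queue one by one, in the order they arrived.
    while True:
        filename = task_queue.get()
        if filename is None:  # sentinel value: stop the worker
            task_queue.task_done()
            break
        processed.append(f"processed {filename}")
        task_queue.task_done()


def handle_upload(filename):
    # The request handler only enqueues the job and returns right away.
    task_queue.put(filename)
    return "upload accepted"


worker_thread = threading.Thread(target=worker, daemon=True)
worker_thread.start()

responses = [handle_upload(name) for name in ("video.mp4", "backup.zip")]

task_queue.join()     # wait until every queued job has been handled
task_queue.put(None)  # tell the worker to shut down
worker_thread.join()
```

The handler responds before any processing happens, which is the property that keeps the page responsive. A real broker adds what this sketch lacks, such as persistence and delivery across machines.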

Monitoring and Alerts: Watching Over Performance

No system is perfect, so monitoring is essential for knowing when intervention is needed. With metrics and logs, you can tell if a part of the system is slowing down, failing, or encountering errors.

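Tools like Prometheus and Grafana provide real-time performance visibility. Prometheus collects and stores metrics and can evaluate alert rules against them, while Grafana is typically used to display those metrics as graphs and charts on dashboards. Alerts fire when metrics exceed thresholds, which helps catch issues before they affect users.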
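The threshold idea itself is simple enough to sketch in plain Python. This is not the Prometheus or Grafana API (both define alerts in their own rule and configuration formats); the function, metric names, and limits below are assumptions for illustration.

```python
def check_thresholds(metrics, thresholds):
    """Return an alert message for every metric above its threshold."""
    alerts = []
    for name, limit in thresholds.items():
        value = metrics.get(name)
        # Metrics that were not reported are skipped rather than alerted on.
        if value is not None and value > limit:
            alerts.append(f"{name}={value} exceeds threshold {limit}")
    return alerts


# Hypothetical snapshot of one service's metrics.
current_metrics = {
    "cpu_percent": 93,
    "error_rate_percent": 0.4,
    "p95_latency_ms": 180,
}
limits = {
    "cpu_percent": 85,
    "error_rate_percent": 1.0,
    "p95_latency_ms": 250,
}

alerts = check_thresholds(current_metrics, limits)
```

Only the CPU metric is over its limit in this snapshot, so a single alert is produced. In practice, alert rules usually also require the condition to hold for some duration, to avoid firing on brief spikes.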

Monitoring also supports long-term planning. If a service consistently maxes out at 8 PM, you can schedule extra servers to come online shortly before that time. Monitoring data guides strategic scaling.

Fault Tolerance: Failing Gracefully

Distributed systems must always be ready for failure. Machine breakdowns, network errors, or memory exhaustion are inevitable—but with proper design, the whole system doesn’t go down. This is called fault tolerance.

One example is database replication. If the primary database crashes, a standby replica is promoted and takes over. Load balancers and application servers should have redundant counterparts too, so that if one fails, another steps in with little or no visible interruption.
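A failover path like the one described above can be sketched as trying each node in order until one responds. This is a simplified illustration: `Unavailable`, `query_with_failover`, and the `primary` and `replica` functions are hypothetical stand-ins, and production systems typically handle promotion and health checks inside the database or its driver.

```python
class Unavailable(Exception):
    """Raised when a node cannot serve the request."""


def query_with_failover(nodes, query):
    """Try each node in order and return the first successful result."""
    last_error = None
    for node in nodes:
        try:
            return node(query)
        except Unavailable as exc:
            last_error = exc  # remember the failure and try the next node
    raise Unavailable("all database nodes failed") from last_error


def primary(query):
    # Simulates a crashed primary database.
    raise Unavailable("primary is down")


def replica(query):
    # Simulates a healthy standby replica.
    return f"replica result for {query!r}"


result = query_with_failover([primary, replica], "SELECT 1")
```

The caller gets an answer from the replica even though the primary is down. If every node fails, the error is raised to the caller instead of being hidden.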

Fault tolerance is like a seatbelt—you don’t need it often, but when you do, it saves everything. Redundancy and failover strategies must be included from the planning stage.

Long-Term Benefits of Scalability

Distributed systems aren’t just technical upgrades—they’re long-term investments for growth. With a solid foundation, expansion is easier. You won’t need to rebuild every time user demand increases.

Scalability brings peace of mind to business owners, developers, and users. They’re not afraid of rising traffic, knowing the system can handle it. Plus, efficient components reduce maintenance costs.

Ultimately, the goal is a system that grows with demand. By combining distributed architecture, monitoring, caching, and smart task division, you can build a setup that’s fast, reliable, and ready for the future.