Why Grid Systems Rely on MPI for Communication

Grid computing connects separate computers to solve complex problems together. Each machine might be in a different location, but they all need to work in sync. For that to happen, they have to talk to each other clearly and quickly. That’s where the Message Passing Interface, or MPI, plays a central role.

MPI is not a programming language but a standardized interface: a specification of library routines that implementations such as Open MPI and MPICH provide for languages like C and Fortran. It helps programs running on different computers share data and stay coordinated. Without it, tasks would be slower or misaligned, especially in large-scale systems where each step depends on data from somewhere else. It is the foundation for parallel task management, letting many processes execute simultaneously while staying synchronized.

Think of a team working on a puzzle in different rooms. MPI is the way they pass pieces back and forth—sharing updates, making sure nothing overlaps, and moving forward together. It keeps the project running as one, even though it’s spread across many hands.

Coordinating Tasks Across Distributed Nodes

Each computer in a grid is called a node, and each node runs one or more processes that handle different parts of the larger task. MPI gives every process a numeric rank and a way to address messages to any other rank. The program uses those ranks to decide who does what, who speaks, who listens, and what gets shared.

When a job starts, a launcher such as mpiexec starts copies of the program on the nodes, and each copy uses its rank to pick its share of the work. As processes complete their pieces, they exchange results through MPI calls. This allows a program to behave as one coordinated computation, even though it’s spread across many machines.

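The key is that all processes follow a shared script. Every send needs a matching receive, and if two processes each wait for the other to act first, the program deadlocks. MPI keeps messages between the same two processes in the order they were sent and describes data in a common format, but lining up the sends and receives is the programmer’s job, even when thousands of machines are involved.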
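The "shared script" idea can be sketched in a few lines. This is plain Python with no MPI library involved: `block_range` and `simulate_job` are hypothetical helpers, and in a real MPI program the rank and process count would come from calls such as `MPI_Comm_rank` and `MPI_Comm_size`. Every process runs the same function and uses its rank to find its own block of the work.

```python
def block_range(rank: int, size: int, n_items: int) -> range:
    """Return the contiguous block of item indices owned by `rank`.

    The first (n_items % size) ranks get one extra item, so block
    sizes differ by at most one.
    """
    base, extra = divmod(n_items, size)
    start = rank * base + min(rank, extra)
    stop = start + base + (1 if rank < extra else 0)
    return range(start, stop)


def simulate_job(size: int, data: list) -> int:
    """Pretend `size` processes each sum their own block, then combine."""
    partial_sums = []
    for rank in range(size):  # in real MPI these would run concurrently
        owned = block_range(rank, size, len(data))
        partial_sums.append(sum(data[i] for i in owned))
    return sum(partial_sums)  # stands in for a reduction onto one rank


if __name__ == "__main__":
    data = list(range(10))
    for rank in range(3):
        print(rank, list(block_range(rank, 3, len(data))))
    print(simulate_job(3, data))  # prints 45
```

Because every rank computes its block from the same formula, no process has to be told what to do; the division of labor follows from the rank alone.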

Synchronous and Asynchronous Communication

In MPI, messages can be sent in two broad ways. Synchronous communication means the sender waits until the receiver has started to take its message before moving on (MPI_Ssend is the explicit form). It’s a safe method that makes each handoff easy to reason about.

Asynchronous, or non-blocking, communication lets the sending process move ahead while the message is delivered in the background (MPI_Isend and MPI_Irecv are the basic calls). This approach can speed things up, but the program must later confirm that each operation has finished, for example with MPI_Wait, and must not reuse the message buffer before then.

Developers of MPI-based programs decide which type to use depending on the task. A tightly coupled weather simulation, for example, may use synchronous exchanges at each time step so every region works from up-to-date neighbor data. A data sorting job might favor asynchronous exchanges so that communication overlaps with local work.
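The difference between the two styles can be imitated with threads. The sketch below is not MPI: `Channel`, `send_sync`, and `send_async` are hypothetical names, and a Python thread stands in for the receiving process. One simplification to note: the handle returned by `send_async` here signals actual delivery, which is stricter than what completing a standard non-blocking send promises in MPI (only that the buffer may be reused).

```python
import queue
import threading


class Channel:
    """A one-way link between two simulated processes."""

    def __init__(self, timeline):
        self._queue = queue.Queue()
        self._timeline = timeline  # shared list recording what happened

    def send_sync(self, payload):
        """Block until the receiver has taken the message."""
        delivered = threading.Event()
        self._queue.put((payload, delivered))
        delivered.wait()

    def send_async(self, payload):
        """Return at once with a handle the sender must wait on later."""
        delivered = threading.Event()
        self._queue.put((payload, delivered))
        return delivered

    def recv(self):
        payload, delivered = self._queue.get()
        self._timeline.append(f"delivered: {payload}")
        delivered.set()  # tell the sender the message was taken
        return payload


def demo():
    timeline = []
    chan = Channel(timeline)
    go = threading.Event()  # keeps the receiver "busy" until set

    def receiver():
        go.wait()
        chan.recv()
        chan.recv()

    worker = threading.Thread(target=receiver)
    worker.start()

    pending = chan.send_async("first")
    timeline.append("sender: overlapped work")  # runs before delivery
    go.set()
    pending.wait()  # comparable to waiting on a request handle

    chan.send_sync("second")  # returns only after delivery
    timeline.append("sender: sync send returned")
    worker.join()
    return timeline


if __name__ == "__main__":
    for entry in demo():
        print(entry)
```

The timeline shows the trade-off: the asynchronous send lets the sender do other work before the message is delivered, while the synchronous send does not return until the receiver has it.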

How Data Is Packaged and Sent

Messages in MPI are structured. Each one carries an envelope with its source, destination, and a tag, along with a description of the data: where it starts, how many items it holds, and what type they are. This tells the receiving node what to expect and how to handle it, much like a labeled envelope that is easy to sort and route.

The structure of the data matters too. MPI can handle simple values like numbers or more complex types like arrays or user-defined structures. Because the sender describes the data by type rather than as raw bytes, the MPI library can convert it where necessary so all nodes interpret it the same way, no matter what system they’re on.

Data types, buffers, and tags make up the anatomy of an MPI message. These parts keep communication organized. They ensure each message ends up in the right place and gets processed correctly, whether it’s part of a math problem or a file transfer.
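To make the envelope idea concrete, here is a small sketch of a labeled message packed into bytes that any machine would read the same way. The wire layout, the `Message` class, and the tag value are all assumptions made for illustration; real MPI libraries use their own internal formats and expose data types through the MPI API rather than through byte layouts like this one.

```python
import struct
from dataclasses import dataclass

# Hypothetical header: source, dest, tag, count as four unsigned 32-bit
# integers in network (big-endian) byte order.
HEADER = struct.Struct("!IIII")


@dataclass
class Message:
    source: int   # rank of the sender
    dest: int     # rank of the receiver
    tag: int      # label the receiver can use to sort messages
    values: list  # payload: a list of floats

    def pack(self) -> bytes:
        """Serialize header and payload with a fixed byte order."""
        header = HEADER.pack(self.source, self.dest, self.tag, len(self.values))
        body = struct.pack(f"!{len(self.values)}d", *self.values)
        return header + body

    @classmethod
    def unpack(cls, raw: bytes) -> "Message":
        """Rebuild a message; the count in the header sizes the payload."""
        source, dest, tag, count = HEADER.unpack_from(raw)
        values = list(struct.unpack_from(f"!{count}d", raw, HEADER.size))
        return cls(source, dest, tag, values)


if __name__ == "__main__":
    TAG_TEMPERATURE = 7  # illustrative tag value
    msg = Message(source=0, dest=3, tag=TAG_TEMPERATURE, values=[1.5, -2.0, 3.25])
    raw = msg.pack()
    print(len(raw))                    # prints 40 (16-byte header + 3 * 8)
    print(Message.unpack(raw) == msg)  # prints True
```

Fixing the byte order and stating the element count up front are what let a receiver on a different kind of machine decode the payload without guessing.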

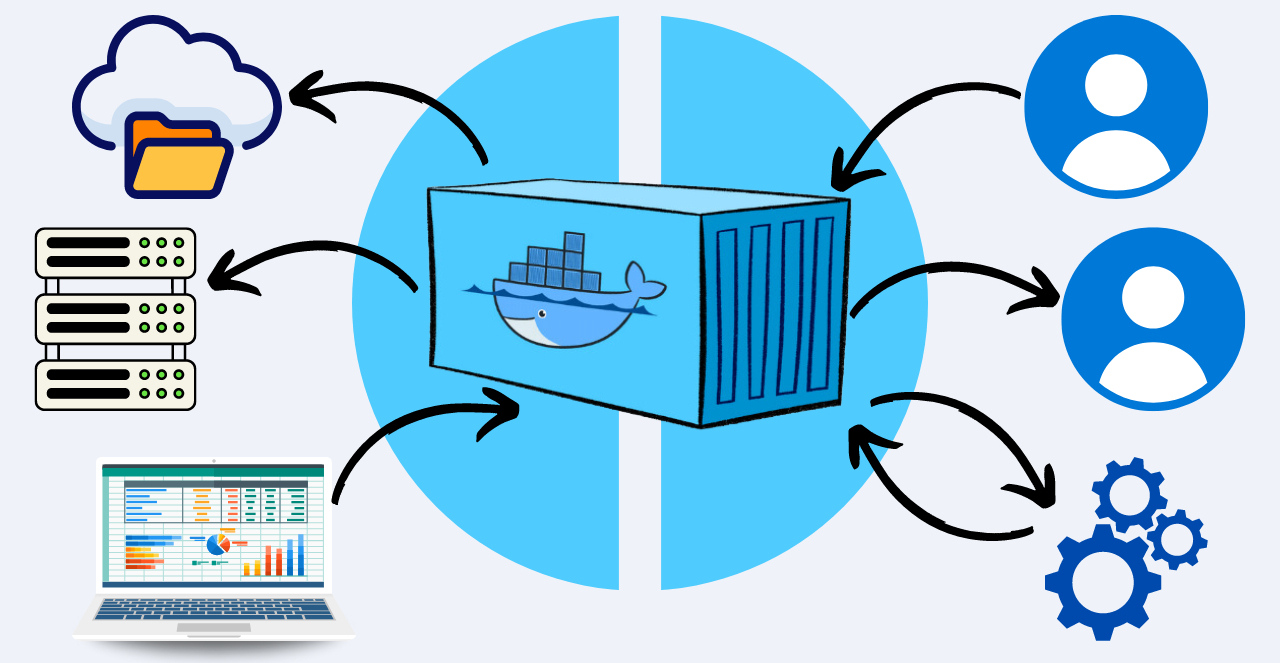

Broadcasting and Collective Communication

Sometimes, a message needs to go to more than one node. MPI includes broadcast operations that send a single message to many nodes at once. This is useful when all nodes need the same piece of information to do their part.

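There are also other collective communication methods. Gather collects data from multiple nodes into one place, scatter splits data from one node across the rest, and reduce combines values from all nodes into a single result such as a sum. These tools keep all parts of the grid aligned and save each program from hand-coding the same exchange patterns.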

Using collective functions wisely can make large jobs more efficient. Instead of the program sending dozens of individual messages, one broadcast call handles the job, and the MPI library is free to use an efficient delivery pattern internally. That saves time and leaves less hand-written communication code to get wrong.
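The collective patterns described above can be modeled as simple functions over per-rank values. This is a single-process sketch, not MPI: `broadcast`, `scatter`, and `gather` are hypothetical stand-ins for what `MPI_Bcast`, `MPI_Scatter`, and `MPI_Gather` accomplish across real processes, and index `r` of each list plays the role of rank `r`.

```python
def broadcast(root_value, size):
    """Every rank ends up holding the root's value."""
    return [root_value] * size


def scatter(root_data, size):
    """Split the root's data into equal chunks, one per rank."""
    if len(root_data) % size:
        raise ValueError("data must divide evenly among ranks")
    step = len(root_data) // size
    return [root_data[r * step:(r + 1) * step] for r in range(size)]


def gather(per_rank_values):
    """Collect one value from every rank into a list on the root."""
    return list(per_rank_values)


def demo(size=4):
    scale = broadcast(10, size)             # same factor on every rank
    chunks = scatter(list(range(8)), size)  # two numbers per rank
    # Each rank works only on its own chunk with its own copy of scale.
    local = [sum(x * scale[r] for x in chunks[r]) for r in range(size)]
    return gather(local)


if __name__ == "__main__":
    print(demo())  # prints [10, 50, 90, 130]
```

The whole job takes three collective steps instead of a separate hand-written message for every pair of ranks, which is the saving the section describes.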

Error Handling and Communication Safety

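In a large grid, things don’t always go as planned. A node might go offline. A message might not arrive. By default, MPI treats a communication error as fatal and aborts the whole job, but a program can change the error handler (for example to MPI_ERRORS_RETURN) so that calls return an error code it can inspect and act on.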

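Error codes and status reports help programmers catch issues early. A program that checks them can set up fallback actions, such as saving a checkpoint, restarting tasks, or shutting down cleanly. How much recovery is possible after a node is lost depends on the MPI implementation, so long-running grid jobs usually plan for failure in the application itself.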

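Some MPI-based systems also add timeouts. These are usually built by the application rather than provided by MPI itself: the program posts a non-blocking receive, checks it periodically, and flags the delay if the message takes too long to arrive. Features like this help grids recover from minor failures and keep working, even when parts of the system face challenges.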
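An application-level timeout of this kind can be sketched as a polling loop. The example below uses Python queues as stand-ins for message inboxes; `recv_with_timeout`, `collect`, and `ReceiveTimeout` are hypothetical names. In an MPI program the polling step would more likely be a non-blocking receive checked with a call such as `MPI_Test`.

```python
import queue
import time


class ReceiveTimeout(Exception):
    """Raised when no message arrives before the deadline."""


def recv_with_timeout(inbox, timeout_s, poll_s=0.01):
    """Poll `inbox` until a message arrives or `timeout_s` elapses."""
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            return inbox.get_nowait()  # message is ready: hand it back
        except queue.Empty:
            if time.monotonic() >= deadline:
                raise ReceiveTimeout(f"no message within {timeout_s} s") from None
            time.sleep(poll_s)  # wait briefly before checking again


def collect(inboxes, timeout_s):
    """Take one result per node, recording which nodes stayed silent."""
    results, silent = {}, []
    for node, inbox in inboxes.items():
        try:
            results[node] = recv_with_timeout(inbox, timeout_s)
        except ReceiveTimeout:
            silent.append(node)  # flag the node instead of crashing
    return results, silent


if __name__ == "__main__":
    healthy, stalled = queue.Queue(), queue.Queue()
    healthy.put("partial result")
    print(collect({0: healthy, 1: stalled}, timeout_s=0.05))
```

Returning the list of silent nodes, rather than raising, leaves the decision to the caller: retry, reassign the work, or stop the job.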

Scaling Across Thousands of Machines

One of MPI’s strengths is its ability to grow. Whether a task uses ten nodes or ten thousand, the interface stays consistent. This scalability makes it well suited to scientific research, financial modeling, or any job that needs a lot of computing power spread across many machines.

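As projects grow, MPI helps manage the increase in communication. Collective operations and process groups let a program replace floods of individual messages with structured exchanges, which MPI libraries can carry out with patterns that spread the traffic across nodes. Balancing the workload itself remains the program’s responsibility, and doing it well avoids bottlenecks and keeps the system running smoothly at scale.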

It’s not just about size but about performance. Adding machines only helps if the work divides cleanly and communication stays cheap relative to computation. For well-partitioned problems, doubling the number of nodes can nearly double the speed of processing; any serial portion of the job, plus the cost of passing messages, sets the upper limit.
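A rough way to see when doubling the nodes "nearly doubles" the speed is Amdahl's law, which estimates speedup from the fraction of the job that can run in parallel. The sketch below is an idealized model: it ignores communication cost entirely, and the 99% parallel fraction is an assumed figure chosen only for illustration.

```python
def speedup(parallel_fraction: float, nodes: int) -> float:
    """Amdahl's law: ideal speedup on `nodes` machines.

    `parallel_fraction` is the share of the run time that can be
    divided among nodes; the remainder runs serially.
    """
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / nodes)


if __name__ == "__main__":
    p = 0.99  # assumed: 99% of the work parallelizes
    for n in (8, 16, 1024, 2048):
        print(n, round(speedup(p, n), 1))
```

With these assumptions, going from 8 to 16 nodes gives roughly 1.86 times the speed, while going from 1024 to 2048 nodes gives under 5% more, because the serial 1% dominates. Real jobs also pay for message passing, so measured gains tend to be lower than this model suggests.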

Real-World Examples in Action

MPI is used in many fields. Weather centers use it to process forecasts quickly. Medical researchers rely on it to run simulations of cell behavior. In both cases, huge volumes of data must be exchanged across multiple machines to reach results on time.

One common use is in climate modeling. These programs divide the Earth into regions, and each node works on one piece. MPI passes information between the regions, allowing them to affect one another—just like real weather systems do.
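The region-to-region exchange can be illustrated with a one-dimensional toy model. This is a simulation in plain Python, not a climate code and not MPI: `split_domain`, `exchange_halos`, and `smooth_step` are hypothetical helpers, the averaging rule is a placeholder for real physics, and the list index stands in for the rank. Each region borrows one edge cell from each neighbor (often called a halo or ghost cell) before updating its own cells.

```python
def split_domain(cells, size):
    """Give each rank a contiguous strip of the 1-D domain."""
    if len(cells) % size:
        raise ValueError("domain must divide evenly among ranks")
    step = len(cells) // size
    return [cells[r * step:(r + 1) * step] for r in range(size)]


def exchange_halos(strips):
    """Return (left_ghost, right_ghost) for every rank.

    Interior ranks receive their neighbors' edge cells; at the outer
    boundaries a rank reuses its own edge value.
    """
    ghosts = []
    last = len(strips) - 1
    for r, strip in enumerate(strips):
        left = strips[r - 1][-1] if r > 0 else strip[0]
        right = strips[r + 1][0] if r < last else strip[-1]
        ghosts.append((left, right))
    return ghosts


def smooth_step(strips):
    """Replace each cell with the mean of itself and its two neighbors."""
    updated = []
    for strip, (left, right) in zip(strips, exchange_halos(strips)):
        padded = [left] + strip + [right]
        updated.append([
            (padded[i - 1] + padded[i] + padded[i + 1]) / 3
            for i in range(1, len(padded) - 1)
        ])
    return updated


if __name__ == "__main__":
    strips = split_domain([0, 0, 0, 9, 9, 0, 0, 0], size=4)
    print(smooth_step(strips))
    # prints [[0.0, 0.0], [3.0, 6.0], [6.0, 3.0], [0.0, 0.0]]
```

The warm patch in the middle spreads into cells owned by different ranks, which only works because each rank saw its neighbor's edge value first. In an MPI program those ghost values would arrive as messages between neighboring ranks.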

Another example is drug discovery. MPI helps analyze how molecules interact by running parallel simulations. These results are then shared and combined, revealing patterns or possibilities that would take too long with one computer alone.

Writing MPI Programs with Clarity

Programming with MPI takes planning. Developers need to think about which data needs to move, when it should be shared, and how to handle delays or errors. Clear logic and clean code make a big difference in how the system performs.

Comments and diagrams often help during the development phase. Mapping out communication before writing code saves time and prevents problems. Tools that test message flow can also help identify weak spots in the design.

Once the system is running, results can be measured and adjusted. If one node is doing too much work, tasks can be reassigned. If messages are delayed, code can be optimized. Good MPI programming is a mix of logic, testing, and real-world tuning.
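One simple way to measure whether a node is doing too much is to compare the slowest rank with the average, then redistribute tasks if the gap is large. The sketch below is one possible approach under stated assumptions: `imbalance` and `rebalance` are hypothetical helpers, task costs are assumed to be known in advance, and the greedy rule (largest task to the least-loaded rank) is a common heuristic rather than anything MPI provides.

```python
def imbalance(times):
    """Slowest rank's time divided by the mean; 1.0 is perfectly even."""
    return max(times) / (sum(times) / len(times))


def rebalance(task_costs, size):
    """Greedy plan: give the largest remaining task to the least-loaded rank."""
    loads = [0.0] * size
    plan = [[] for _ in range(size)]
    by_cost = sorted(task_costs.items(), key=lambda item: item[1], reverse=True)
    for task, cost in by_cost:
        rank = loads.index(min(loads))  # first rank with the lightest load
        plan[rank].append(task)
        loads[rank] += cost
    return plan, loads


if __name__ == "__main__":
    print(imbalance([12, 4]))  # prints 1.5
    plan, loads = rebalance({"a": 8, "b": 4, "c": 3, "d": 1}, size=2)
    print(plan, loads)         # prints [['a'], ['b', 'c', 'd']] [8.0, 8.0]
    print(imbalance(loads))    # prints 1.0
```

Since the whole job finishes only when the slowest rank does, an imbalance of 1.5 means the run takes about 50% longer than an even split of the same work would.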

Keeping Grid Communication Efficient and Reliable

At its core, MPI helps different computers work as one. It doesn’t just send messages—it makes sure they’re received, understood, and used in the right way. That coordination keeps grid computing fast, reliable, and useful for the biggest tasks.

From data-heavy research to massive simulations, MPI keeps systems talking clearly. It supports growth, handles errors, and helps manage the complexity of distributed work. For anyone working with grid systems, understanding MPI means building better solutions.

Whether you’re just getting started or fine-tuning a project, MPI remains the backbone of how grids stay in sync—quietly moving information behind the scenes, one message at a time.