General Differences Between the Two Types of Computing

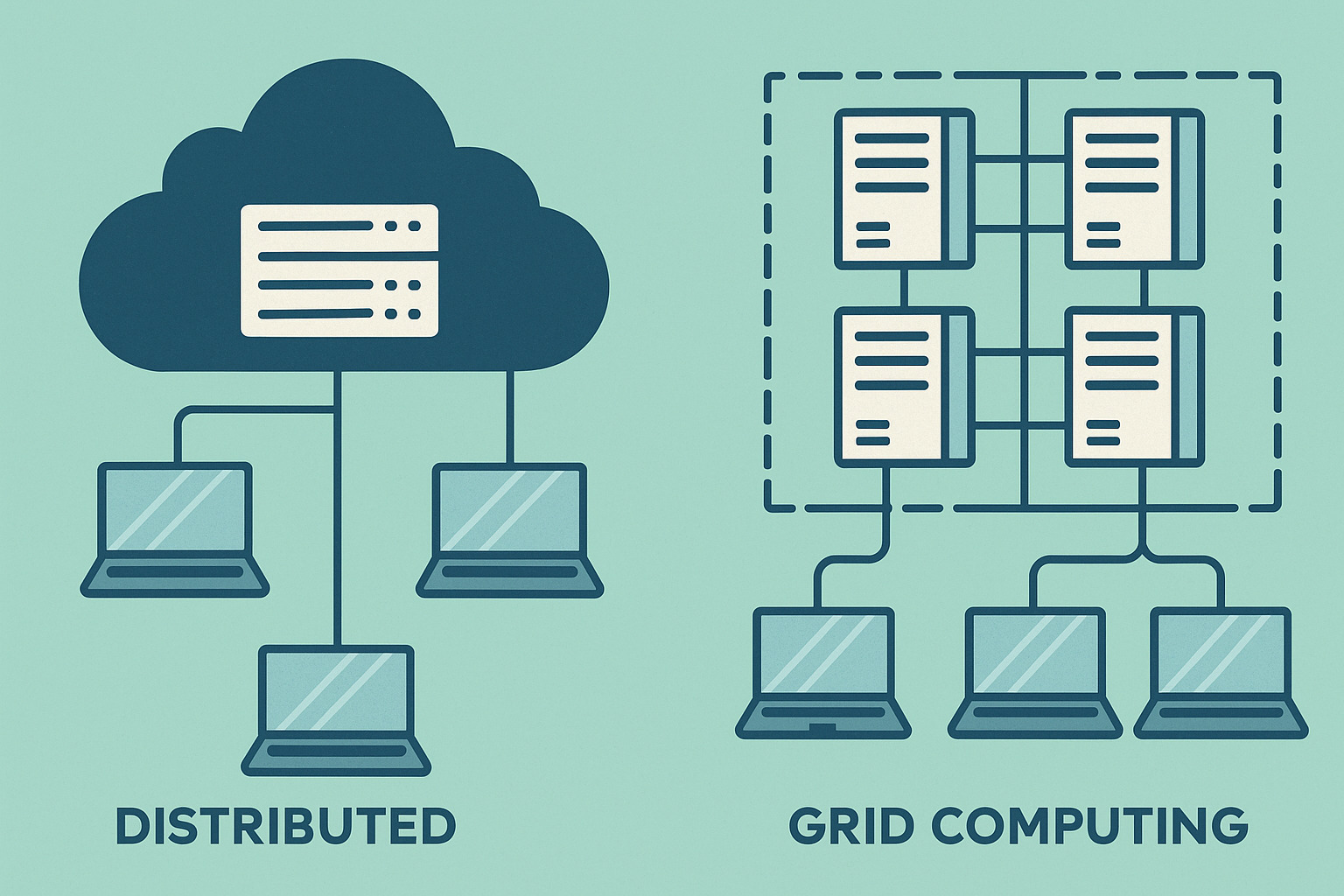

Distributed computing and grid computing are two approaches to spreading large computing workloads across multiple machines. When a project demands more processing power than a single computer can supply, both offer a way forward. Understanding the distinction between the two matters, especially for people working in data, research, and automation.

In distributed computing, multiple computers work together as one system. These machines may be located in the same place or scattered across various locations. Tasks are divided among them, and each machine is responsible for a portion of the work. This approach is commonly used in businesses or organizations that control all the computers involved in the process.

Grid computing is a more loosely coupled variation on the same idea. It pools computers from different places, often built for different purposes, to complete a single task. These machines may belong to different institutions and are lent to the project temporarily. It is often used in scientific research, such as climate modeling or analyzing astronomical data.

Task Distribution Concept

A core principle of both systems is task sharing. When a task is too large for a single machine, it is broken into smaller parts that multiple computers can execute simultaneously. It’s like a group project where each member has an assigned part.
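The split-and-combine idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: threads on one machine stand in for separate computers, and `split_into_chunks`, `process_chunk`, and `run_in_parallel` are hypothetical names chosen for this example.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(items, chunk_count):
    """Split items into at most chunk_count roughly equal, contiguous parts."""
    size = max(1, -(-len(items) // chunk_count))  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]


def process_chunk(chunk):
    """Hypothetical unit of work: sum the squares of one chunk."""
    return sum(x * x for x in chunk)


def run_in_parallel(items, worker_count=4):
    """Fan the chunks out to workers, then combine the partial results."""
    chunks = split_into_chunks(items, worker_count)
    # Threads are a stand-in here; in a real system each chunk would be
    # sent to a different machine over the network.
    with ThreadPoolExecutor(max_workers=worker_count) as pool:
        partial_results = list(pool.map(process_chunk, chunks))
    return sum(partial_results)


total = run_in_parallel(list(range(1000)))
```

The pattern is the same in both models: divide the input, let each worker handle its share independently, then merge the partial answers.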

In distributed computing, the connection between machines is more tightly controlled. For example, a company might use a distributed system for its website backend, with one server handling the database, another managing authentication, and another delivering content. Each part relies on smooth communication among the servers.

In grid computing, connections are more flexible. One computer might be in Asia, another in Europe, and another in the US, all working on the same task. They’re not always connected but collaborate when needed—much like volunteers who assist when required but don’t belong to a single organization.
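One way to picture this "help when available" behavior is a shared queue of work units that nodes draw from whenever they happen to be online. The sketch below is a toy simulation, not how any particular grid middleware works; the node names and the `availability` schedule are made up for illustration.

```python
from collections import deque


def run_grid(work_units, availability):
    """Assign work units to nodes that come and go.

    availability is a list of rounds; each round lists the node names
    that are online in that round (a hypothetical schedule).
    Returns a dict mapping each completed unit to the node that ran it.
    """
    pending = deque(work_units)
    completed = {}
    for online_nodes in availability:
        for node in online_nodes:
            if not pending:
                return completed
            unit = pending.popleft()
            completed[unit] = node  # each online node takes one unit per round
    return completed


# Nodes on three continents join and leave between rounds.
schedule = [["asia-1", "eu-1"], ["us-1"], [], ["eu-1", "us-1"]]
done = run_grid(["u1", "u2", "u3", "u4", "u5"], schedule)
```

Even with a round in which nobody is online, the job still finishes, because no unit depends on a specific machine being present.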

Ownership and Resource Management

Another key difference lies in ownership of the resources. In distributed computing, a single entity typically owns all the involved devices—often a company or organization with full control over the system. This makes it easier to manage and update.

In grid computing, resources come from various sources. For example, multiple universities may contribute computing power to a grid project. These machines are not owned by one group but are used collaboratively for the project’s goal. Management is more challenging due to the need for coordination across institutions.

The centralized control in distributed computing makes security easier to enforce, while grid computing relies on trust and collaboration to maintain project integrity.

Benefits for Large-Scale Data Processing

For data-heavy tasks like AI training or genomic analysis, both systems offer benefits. Each has its strengths and limitations. Distributed computing provides faster response times when servers are in the same location, offering low latency and easier task synchronization.

Grid computing, despite delays from physical distances, compensates with the massive power of the network. Imagine a project needing 10,000 cores to complete in a week—hard to do in one place but possible through institutional contributions.
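To put rough numbers on that scenario, the arithmetic can be written out directly. The 10,000 cores and one-week duration come from the example above; the figure of 400 cores per institution is purely an assumption for illustration.

```python
import math


def core_hours(cores, days):
    """Total compute budget: cores multiplied by hours of wall-clock time."""
    return cores * days * 24


def institutions_needed(total_cores, cores_per_institution):
    """How many equal contributors it takes to reach the core count."""
    return math.ceil(total_cores / cores_per_institution)


budget = core_hours(10_000, 7)             # 1,680,000 core-hours
sites = institutions_needed(10_000, 400)   # 25 sites at an assumed 400 cores each
```

Spread across a few dozen contributors, a workload that would strain one data center becomes a modest request to each participant.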

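Certain scientific projects wouldn’t have been possible without grid-style setups. A well-known example is SETI@home, in which volunteers’ idle computers analyzed radio telescope data for possible signals from space until the project stopped distributing new work in 2020. That scale of donated computing power is something a single organization’s distributed system could rarely match.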

Usage in Daily Settings

Distributed computing is often seen in everyday business and services. Websites using cloud services likely employ a distributed setup with load balancing to maintain stability during high traffic—such as e-commerce platforms during holidays.
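Load balancing can take many forms; round-robin is among the simplest. The sketch below assumes a fixed list of healthy servers with hypothetical names, and it ignores health checks and weighting that production balancers usually add.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand each incoming request to the next server in a fixed rotation."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self._rotation = cycle(servers)

    def route(self, request_id):
        """Return the server chosen for this request.

        request_id is unused by round-robin; it is kept so that other
        strategies (for example hashing) could share the same interface.
        """
        return next(self._rotation)


balancer = RoundRobinBalancer(["web-1", "web-2", "web-3"])
assignments = [balancer.route(i) for i in range(5)]
# assignments == ["web-1", "web-2", "web-3", "web-1", "web-2"]
```

Because requests are spread evenly, a holiday traffic spike is absorbed by all servers rather than overwhelming one.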

Grid computing isn’t typically used in such scenarios. Instead, it appears in high-level research institutions and major engineering, space, or bioinformatics projects. It’s not suitable for small business operations.

Some hybrid systems combine both concepts. A cloud platform may use a distributed system internally but connect to external grids when extra power is needed, depending on the project’s size and goal.

Technologies Behind Each System

To operate effectively, distributed computing requires robust network infrastructure. It often uses a microservices architecture, load balancers, container tools like Docker, and orchestrators like Kubernetes. Consistent communication and failover support are essential to keep services running when individual components fail.
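Failover, in its simplest form, means trying another replica when the first one does not answer. The following is a minimal sketch under the assumption that each replica is a plain Python callable that raises `ConnectionError` when it is down; `flaky_primary` and `healthy_backup` are hypothetical stand-ins for real network calls.

```python
def call_with_failover(replicas, request):
    """Try each replica in order and return the first successful response."""
    for replica in replicas:
        try:
            return replica(request)
        except ConnectionError:
            continue  # this replica is unavailable, so move on to the next
    raise ConnectionError(f"all {len(replicas)} replicas failed")


def flaky_primary(request):
    """Hypothetical primary that is currently down."""
    raise ConnectionError("primary unreachable")


def healthy_backup(request):
    """Hypothetical backup that answers normally."""
    return f"handled {request} on backup"


response = call_with_failover([flaky_primary, healthy_backup], "GET /cart")
```

Real systems add timeouts, retries with backoff, and health checks, but the core idea is the same: a single failed machine should not become a failed request.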

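Grid computing setups are more complex. Middleware such as the Globus Toolkit, a long-standing example that has since been retired, was built to handle resource sharing across systems run by different organizations. Beyond the technology, policy management ensures that participation is legal and secure.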

Both systems require monitoring tools to assess performance and security. No single approach is always better—the right technology depends on the type of work and project scope.

Security and Operational Responsibility

Security is a major concern with interconnected systems. In distributed computing, single ownership allows for easier protection—through unified policies, firewall setups, and quick threat responses.

Grid computing faces additional challenges. Since machines aren’t controlled by one entity, agreements are needed to secure data. Some grid projects use sandboxing to isolate faults and prevent them from affecting the entire system.
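As a rough illustration of fault isolation, a work unit can be run in a separate process with a time limit, so that a crash or hang does not take the host program down with it. This is only process-level isolation, not a real security sandbox, and `run_work_unit` is a hypothetical helper written for this example.

```python
import subprocess
import sys


def run_work_unit(code, timeout_seconds=5):
    """Run a snippet of Python in a child process and report what happened."""
    try:
        result = subprocess.run(
            [sys.executable, "-I", "-c", code],  # -I: isolated mode
            capture_output=True,
            text=True,
            timeout=timeout_seconds,
        )
    except subprocess.TimeoutExpired:
        return {"ok": False, "output": "", "error": "timed out"}
    return {
        "ok": result.returncode == 0,
        "output": result.stdout.strip(),
        "error": result.stderr.strip(),
    }


good = run_work_unit("print(2 + 3)")
bad = run_work_unit("raise ValueError('bad unit')")
```

Here `good["ok"]` is true and `bad["ok"]` is false, yet the failure in the second unit never reaches the parent process. Production grids typically go further, for example with virtual machines or containers.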

Each participant is responsible for maintaining updated systems. One vulnerability could compromise the whole network—making trust and technical competence essential.

Speed and Scalability

For urgent tasks, distributed computing is often preferred due to quicker results in real-time applications. For instance, banks use distributed systems for real-time fraud detection.

If response time isn’t as critical but extreme computing power is needed, grid computing is more suitable. Projects like storm simulations or disease studies prioritize total throughput over how quickly any single result comes back.

Scalability also matters. Distributed systems can scale by adding servers if configured well. Grid systems handle volume flexibly, but adding participants is more complex.

When One Is Better Than the Other

For high uptime, strong security, and fast processing, distributed computing is ideal. Companies with online platforms—such as video streaming, e-commerce, or mobile apps—commonly use it to avoid service interruptions.

For collaborative computing across organizations, grid computing works best—such as in climate change studies or deep learning models requiring vast datasets.

Choosing between them isn’t just about preference. It depends on the objective, budget, and participant capabilities. Careful planning and technical consideration are key.

The Future of Parallel Computing

As technology advances, the demand for powerful computing systems grows. Distributed computing helps develop faster digital services, while grid computing enables collaborative research beyond any single institution’s capacity.

Related models like edge computing and volunteer computing add further layers to this concept. Regardless of form, all of them aim to deliver broader and more efficient data processing.

Understanding their differences helps not only tech professionals but also entrepreneurs, managers, and researchers in selecting the right infrastructure for their needs.