Organizing Tasks Across a Distributed Grid Network

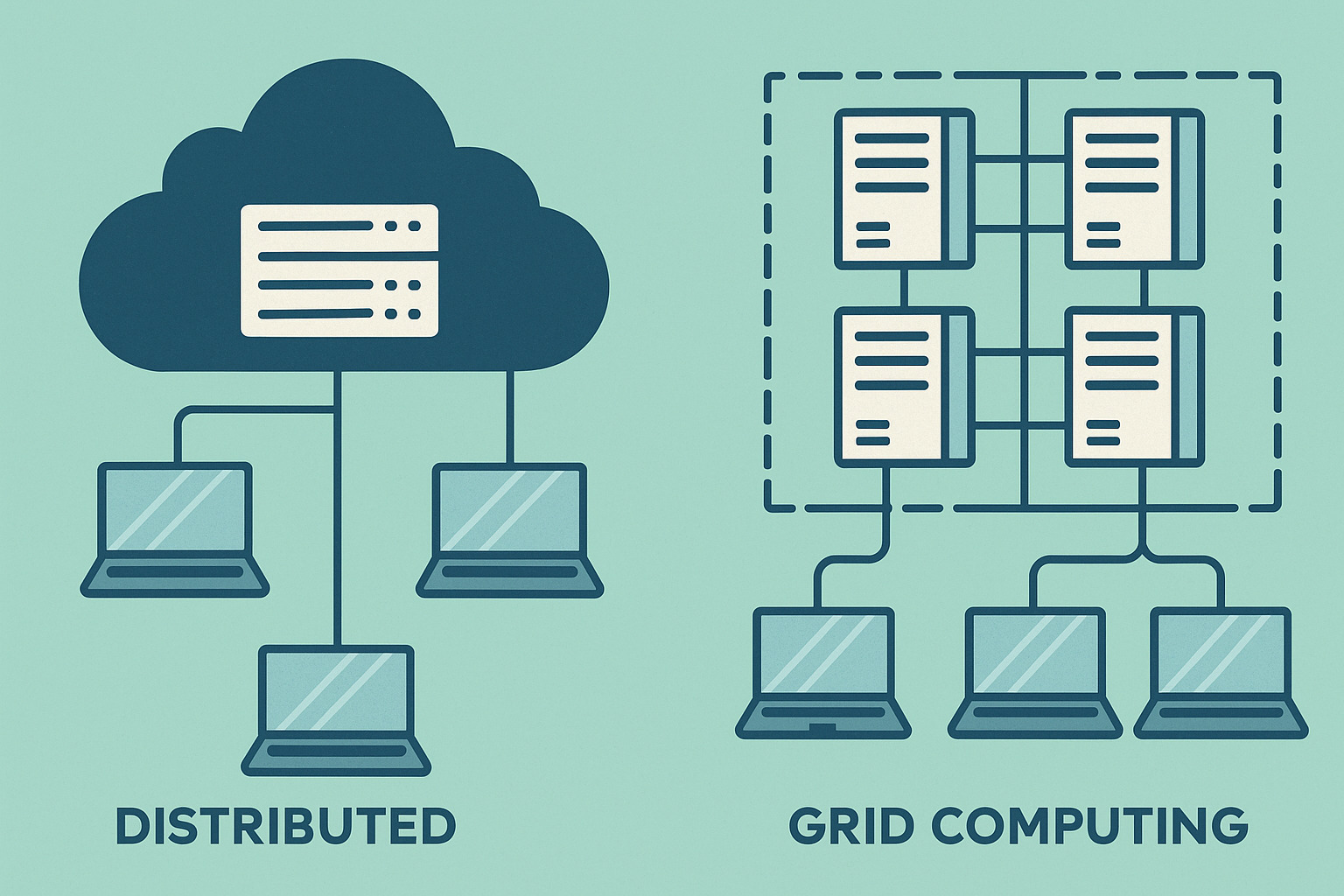

In grid computing, many computers work together to process complex tasks simultaneously. Unlike traditional setups that run a workload on a single machine, grid computing uses multiple machines that may be located in different geographic areas. For such a distributed setup to succeed, efficient job scheduling is essential.

Job scheduling plays a critical role in a grid environment. It determines which tasks should be prioritized, which computing resource should handle each one, and when each should run. It works much like a dispatcher managing the queue at a busy terminal, making sure that no task is overlooked or delayed unnecessarily.

When scheduling is managed effectively, task delays are minimized and the computing power available across the network is used to its fullest potential.

The Role of the Job Scheduler in the Computing Process

The job scheduler functions much like a traffic controller for the grid. It ensures that each task has a designated route and time slot for execution. For instance, if there are ten computers and twenty pending tasks, the scheduler decides how those tasks should be distributed among the nodes.

Before assigning any job, the scheduler weighs factors such as available memory, current CPU load, and task priority. It does not forward tasks blindly; it first checks whether a given node can actually handle the new task.
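A minimal Python sketch of this decision, using the ten-computer, twenty-task example from above. The `Node` and `Task` types, the 90% CPU cutoff, and the fixed 0.1 load increment per task are illustrative assumptions rather than part of any particular scheduler; priorities follow the assumed convention that a lower number is more urgent.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_mem_gb: float  # memory currently available on the node
    cpu_load: float     # fraction of CPU in use, 0.0 to 1.0


@dataclass
class Task:
    name: str
    mem_gb: float       # memory the task needs
    priority: int       # assumed convention: lower number = more urgent


def pick_node(task, nodes, max_load=0.9):
    """Return the least-loaded node that can fit the task, or None if none can."""
    candidates = [n for n in nodes
                  if n.free_mem_gb >= task.mem_gb and n.cpu_load < max_load]
    if not candidates:
        return None  # nothing suitable right now; the task stays queued
    return min(candidates, key=lambda n: n.cpu_load)


def schedule(tasks, nodes, load_per_task=0.1):
    """Assign tasks in priority order; returns {task name: node name or None}."""
    plan = {}
    for task in sorted(tasks, key=lambda t: t.priority):
        node = pick_node(task, nodes)
        if node is not None:
            node.free_mem_gb -= task.mem_gb   # reserve the task's memory
            node.cpu_load += load_per_task    # crude estimate of the added load
        plan[task.name] = node.name if node is not None else None
    return plan


# Ten identical computers, twenty pending tasks.
nodes = [Node(f"n{i}", free_mem_gb=8.0, cpu_load=0.0) for i in range(10)]
tasks = [Task(f"t{i}", mem_gb=2.0, priority=i % 3) for i in range(20)]
plan = schedule(tasks, nodes)  # each node ends up with two tasks
```

Because the least-loaded feasible node always wins, identical nodes fill up evenly; a task that fits nowhere is left unassigned instead of being forced onto an overloaded machine.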

If a problem arises on one machine, the scheduler can reassign the task to another available node so that the system as a whole keeps running.

Static vs. Dynamic Scheduling in Grid Environments

There are two main types of job scheduling: static and dynamic. Static scheduling involves planning the entire execution process ahead of time. It is most effective for predictable workloads, such as batch simulations or scheduled computations.

Dynamic scheduling, on the other hand, adjusts in real time based on the current state of the system. If a node crashes or new resources are added, the scheduler promptly revises task assignments to adapt to the updated environment.

Dynamic scheduling offers greater flexibility, making it more suitable for large-scale grid networks where workloads frequently change.
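The difference can be illustrated with a small simulation. It is a sketch under simplifying assumptions: the static plan is a deliberately naive round-robin fixed in advance, while the dynamic scheduler hands each task to whichever node becomes free first. The task durations are hypothetical, and a real static planner could use duration estimates to do better than round-robin.

```python
import heapq


def static_makespan(durations, n_nodes):
    """Plan fixed up front: task i always goes to node i % n_nodes."""
    finish = [0.0] * n_nodes
    for i, d in enumerate(durations):
        finish[i % n_nodes] += d
    return max(finish)  # time at which the last node finishes


def dynamic_makespan(durations, n_nodes):
    """Decided at run time: each task goes to whichever node frees up first."""
    free_at = [(0.0, node) for node in range(n_nodes)]  # (time node is free, node id)
    heapq.heapify(free_at)
    for d in durations:
        t, node = heapq.heappop(free_at)       # earliest available node
        heapq.heappush(free_at, (t + d, node))
    return max(t for t, _ in free_at)


durations = [10, 1, 10, 1, 1, 1]        # uneven task lengths, in seconds
print(static_makespan(durations, 2))    # 21.0
print(dynamic_makespan(durations, 2))   # 12.0
```

Makespan here means the time until the last task finishes. The static plan puts both long tasks on the same node, while the dynamic scheduler reacts to which node is actually idle.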

Load Balancing and Distributing Workload Evenly Across Nodes

One of the central objectives of job scheduling in grid computing is achieving effective load balancing across all participating nodes. This ensures that no single machine is overburdened while others remain underutilized. Without proper distribution, system performance can degrade, leading to slower task execution, wasted resources, and bottlenecks in processing pipelines.

To achieve balance, schedulers assess the real-time status of each node, including available memory, processing capacity, bandwidth, and workload history. These metrics help determine which machines are best suited for incoming tasks. In more advanced systems, machine learning algorithms may even be used to predict future node availability, optimizing task distribution proactively.
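A common greedy heuristic for nodes of different speeds is to place each task on the node where it would finish earliest, handling the largest tasks first (the longest-processing-time-first rule). The sketch below considers only processing speed; memory, bandwidth, and workload history are left out, and the `balance` function and its units are assumptions made for illustration.

```python
def balance(task_sizes, node_speeds):
    """Greedy earliest-finish-time assignment on nodes of different speeds.

    task_sizes: work units per task; node_speeds: work units per second per node.
    Returns (node index chosen for each task, projected busy time per node).
    """
    busy = [0.0] * len(node_speeds)
    assignment = [None] * len(task_sizes)
    # Placing the largest tasks first usually yields a tighter balance.
    order = sorted(range(len(task_sizes)), key=lambda i: -task_sizes[i])
    for i in order:
        # Pick the node that would finish this task soonest, given its current queue.
        best = min(range(len(node_speeds)),
                   key=lambda n: busy[n] + task_sizes[i] / node_speeds[n])
        busy[best] += task_sizes[i] / node_speeds[best]
        assignment[i] = best
    return assignment, busy


assignment, busy = balance([8, 4, 4, 2, 2], node_speeds=[2.0, 1.0])
print(assignment)  # [0, 1, 0, 1, 0]
print(busy)        # [7.0, 6.0]
```

The faster node receives more work in absolute terms, yet both nodes finish within a second of each other, which is the goal of load balancing.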

An evenly balanced grid infrastructure leads to greater throughput, reduced latency, and better overall efficiency. By preventing resource idleness and minimizing overloads, the system maximizes its computational potential, offering both speed and cost-efficiency to large-scale operations.

Using Priorities to Manage the Job Queue

In grid computing, not every task holds the same weight or urgency. Some operations—such as real-time emergency response models, financial risk assessments, or medical data simulations—require immediate attention. Prioritizing such tasks is critical to ensure timely results, especially when resources are shared among various users and applications.

Many schedulers implement a tiered priority system, ordering the queue so that jobs with higher urgency sit at the front. When a high-priority job enters the queue, the scheduler may delay queued lower-priority jobs or pause (preempt) running ones. This approach mirrors real-world triage, where resources are allocated by urgency and criticality rather than by arrival time alone.

By embedding priority levels into the scheduling logic, grid systems can stay responsive to time-sensitive workloads while still processing lower-priority tasks as capacity becomes available. This keeps resources busy without compromising mission-critical operations.
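A priority queue is the usual data structure behind this behavior. Below is a minimal sketch built on Python's standard `heapq` module; the `JobQueue` class, the `should_preempt` helper, and the convention that a lower number means higher urgency are illustrative assumptions. An arrival counter keeps jobs of equal priority in first-come, first-served order.

```python
import heapq
import itertools


class JobQueue:
    """Priority queue of jobs: lower number = more urgent; FIFO among equal priorities."""

    def __init__(self):
        self._heap = []
        self._arrival = itertools.count()  # tie-breaker that preserves arrival order

    def submit(self, job, priority):
        heapq.heappush(self._heap, (priority, next(self._arrival), job))

    def next_job(self):
        """Remove and return the most urgent job, or None if the queue is empty."""
        if not self._heap:
            return None
        _, _, job = heapq.heappop(self._heap)
        return job

    def peek_priority(self):
        """Priority of the most urgent waiting job, or None if the queue is empty."""
        return self._heap[0][0] if self._heap else None


def should_preempt(running_priority, queue):
    """True if some waiting job is more urgent than the job currently running."""
    top = queue.peek_priority()
    return top is not None and top < running_priority


q = JobQueue()
q.submit("nightly-report", 3)
q.submit("risk-model", 1)
q.submit("backup", 3)
q.submit("emergency-sim", 0)
print(should_preempt(2, q))              # True: a priority-0 job is waiting
print([q.next_job() for _ in range(4)])
# ['emergency-sim', 'risk-model', 'nightly-report', 'backup']
```

Note that `nightly-report` still runs before `backup`: both have priority 3, so arrival order decides.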

Fault Tolerance and Recovering Tasks When Failures Occur

Fault tolerance is an essential feature in grid computing, where reliability cannot be compromised even when individual nodes fail unexpectedly. As distributed systems often span multiple locations and hardware types, the likelihood of intermittent failures is high. Without mechanisms to handle these failures, the integrity and continuity of tasks would be at risk.

To address this, schedulers are designed to continuously monitor task execution across nodes. If a node becomes unresponsive or fails, the system automatically reallocates the interrupted task to another functional node. In some cases, duplicate tasks or checkpoints are maintained so that work can be resumed without starting over entirely, minimizing data loss and processing delays.

This error-handling capability lets operations continue with minimal disruption, even amid hardware malfunctions or connectivity issues. Fault-tolerant scheduling preserves productivity, protects data integrity, and improves the overall resilience of the grid infrastructure.
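The checkpoint-and-reassign idea can be sketched as a small simulation. Assumptions: a task is a sequence of equal-sized steps, progress is checkpointed every `checkpoint_every` steps, and each hypothetical node either crashes after a given number of steps or never fails. Task duplication is not modeled here.

```python
def run_with_checkpoints(total_steps, checkpoint_every, nodes):
    """Simulate a task that checkpoints its progress and moves to another node on failure.

    nodes: list of (name, fail_after), where fail_after is how many steps the node
    completes before crashing, or None if it never fails.
    Returns (node that finished the task, total steps executed including redone work).
    """
    checkpoint = 0   # last saved step
    executed = 0     # every step run, including work lost in crashes
    for name, fail_after in nodes:
        step = checkpoint        # resume from the last checkpoint, not from zero
        done_here = 0
        while step < total_steps:
            if fail_after is not None and done_here == fail_after:
                break            # node crashed: progress since the checkpoint is lost
            step += 1
            done_here += 1
            executed += 1
            if step % checkpoint_every == 0:
                checkpoint = step  # persist progress
        else:
            # The else branch runs only if the loop ended without a crash.
            return name, executed
    raise RuntimeError("all nodes failed before the task completed")


print(run_with_checkpoints(100, 10, [("A", 35), ("B", None)]))    # ('B', 105)
print(run_with_checkpoints(100, 1000, [("A", 35), ("B", None)]))  # ('B', 135)
```

With checkpoints every 10 steps, node B resumes from step 30 and only the 5 steps completed after that checkpoint are redone; without usable checkpoints, all 35 steps are lost.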

Resource Reservation for High-Demand Tasks

Certain computational tasks require multiple high-performance nodes working together over an extended period. Examples include climate modeling, seismic simulations, and complex 3D rendering, where sustained, uninterrupted processing is critical. In such cases, resource reservation becomes a vital scheduling strategy.

Schedulers can pre-allocate a defined set of nodes for a specific task, blocking them from being used by other jobs during the reserved window. This prevents mid-execution interruptions and ensures the reserved resources are fully available for the duration of the task. This concept mirrors booking a dedicated meeting space in a busy office to ensure exclusive use.

Reserving resources in this way gives high-priority, high-demand applications guaranteed capacity. It shields task execution from resource contention, enabling precise planning and timely completion, particularly in industries where computational timing has direct operational or financial implications.
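At its core, a reservation calendar is an interval-overlap check per node. This is a minimal sketch with hypothetical names: time is an abstract number (hours, say), windows are half-open `[start, end)`, and booking is all-or-nothing so a job never starts with only part of the nodes it needs.

```python
class ReservationBook:
    """Advance reservations: each node keeps a list of booked [start, end) windows."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self.bookings = {n: [] for n in self.nodes}  # node -> [(start, end, job)]

    def is_free(self, node, start, end):
        # Half-open windows overlap iff each one starts before the other ends.
        return all(not (start < e and s < end) for s, e, _ in self.bookings[node])

    def reserve(self, job, n_nodes, start, end):
        """Book n_nodes nodes for [start, end), all or nothing.

        Returns the list of booked node names, or None if too few nodes are free.
        """
        free = [n for n in self.nodes if self.is_free(n, start, end)]
        if len(free) < n_nodes:
            return None  # a partial booking would leave the job unable to start
        chosen = free[:n_nodes]
        for n in chosen:
            self.bookings[n].append((start, end, job))
        return chosen


book = ReservationBook(["n1", "n2", "n3", "n4"])
print(book.reserve("climate-model", 3, start=0, end=48))  # ['n1', 'n2', 'n3']
print(book.reserve("render", 2, start=24, end=30))        # None: only n4 is free then
print(book.reserve("render", 2, start=48, end=60))        # ['n1', 'n2']
```

Half-open windows let a new reservation begin exactly when the previous one ends, as the third call shows.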

Monitoring Tools and Real-Time Job Status Feedback

Keeping track of job status is vital in grid computing. It is not enough to simply dispatch tasks and wait for results—ongoing feedback is essential for operational oversight.

Schedulers are often paired with monitoring tools that display how many tasks are active, queued, or completed. If delays occur, these tools help pinpoint the cause—whether it’s a memory shortage, CPU overload, or connection issue.

Real-time monitoring enables fast adjustments, preventing minor disruptions from escalating into prolonged workflow delays.
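A monitoring loop largely reduces to two questions: how many jobs are in each state, and which nodes look unhealthy. This sketch assumes a hypothetical status record per job (a state plus the time of its last heartbeat) and per node (memory and CPU usage as fractions, plus reachability); the 60-second timeout and 95% thresholds are arbitrary example values.

```python
import time
from collections import Counter


def summarize(jobs, now=None, heartbeat_timeout=60.0):
    """Count jobs per state and list active jobs whose heartbeat has gone stale.

    jobs: job id -> {"state": "queued" | "active" | "completed",
                     "last_heartbeat": seconds since the epoch (active jobs only)}
    """
    now = time.time() if now is None else now
    counts = Counter(job["state"] for job in jobs.values())
    stalled = sorted(
        job_id for job_id, job in jobs.items()
        if job["state"] == "active"
        and now - job.get("last_heartbeat", now) > heartbeat_timeout
    )
    return dict(counts), stalled


def diagnose(node_metrics, mem_limit=0.95, cpu_limit=0.95):
    """Label nodes with a likely cause of delay: connection, memory, or CPU."""
    issues = {}
    for node, m in node_metrics.items():
        if not m.get("reachable", True):
            issues[node] = "connection issue"
        elif m.get("mem_used", 0.0) >= mem_limit:
            issues[node] = "memory shortage"
        elif m.get("cpu_used", 0.0) >= cpu_limit:
            issues[node] = "CPU overload"
    return issues


jobs = {
    "j1": {"state": "active", "last_heartbeat": 100.0},
    "j2": {"state": "active", "last_heartbeat": 10.0},
    "j3": {"state": "queued"},
    "j4": {"state": "completed"},
}
print(summarize(jobs, now=120.0))
# ({'active': 2, 'queued': 1, 'completed': 1}, ['j2'])
print(diagnose({"a": {"mem_used": 0.97, "cpu_used": 0.20, "reachable": True},
                "b": {"mem_used": 0.30, "cpu_used": 0.99, "reachable": True},
                "c": {"reachable": False}}))
# {'a': 'memory shortage', 'b': 'CPU overload', 'c': 'connection issue'}
```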

Differences Between Scheduling in Cluster and Grid Systems

Although the principles are similar, job scheduling in grid computing differs from that in cluster computing. In cluster environments, machines are usually located in the same place, with identical configurations and shared file systems.

In contrast, grid computing nodes are geographically distributed—sometimes across countries. Scheduling must account for issues like latency, time zone differences, and system compatibility, making the process more complex.

As a result, grid schedulers must be more advanced and flexible, capable of handling unpredictable variables that don’t typically exist in centralized clusters.
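One way a grid scheduler can account for latency is to compare estimated completion times that include data transfer, not just compute speed. The sketch below uses a hypothetical per-site record (latency, bandwidth, speed, queue wait, and a set of supported platforms as a stand-in for compatibility checks); time-zone effects are not modeled, and all numbers are made up for illustration.

```python
def estimated_completion(site, data_mb, work):
    """Rough time (seconds) to finish a job at a site: queue wait + transfer + compute."""
    transfer = site["latency_s"] + data_mb / site["bandwidth_mb_s"]
    compute = work / site["speed"]  # speed in work units per second
    return site["queue_wait_s"] + transfer + compute


def choose_site(sites, data_mb, work, platform):
    """Pick the compatible site with the lowest estimated completion time, or None."""
    compatible = [name for name, s in sites.items() if platform in s["platforms"]]
    if not compatible:
        return None
    return min(compatible,
               key=lambda name: estimated_completion(sites[name], data_mb, work))


sites = {
    "local":  {"latency_s": 0.01, "bandwidth_mb_s": 1000.0, "speed": 10.0,
               "queue_wait_s": 0.0, "platforms": {"linux-x86_64"}},
    "remote": {"latency_s": 0.2, "bandwidth_mb_s": 10.0, "speed": 40.0,
               "queue_wait_s": 0.0, "platforms": {"linux-x86_64", "linux-arm64"}},
}
print(choose_site(sites, data_mb=5000, work=400, platform="linux-x86_64"))  # local
print(choose_site(sites, data_mb=10, work=4000, platform="linux-x86_64"))   # remote
```

With a large input, the nearby but slower site wins because moving 5,000 MB over the slow link dominates the estimate; with a small input and heavy computation, the faster remote site wins.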

Industry-Wide Use of Job Scheduling in Grid Computing

Job scheduling is fundamental to many industries that rely on grid computing. In scientific research, it supports genome analysis and physics simulations. In finance, it enables fraud detection and risk modeling. In media, it accelerates video rendering and encoding.

Each of these fields benefits from faster results and lower operational costs through effective scheduling. Schedulers are often tuned to the needs of each industry, whether the focus is strict deadlines, cost control, or computational constraints.

Thus, job scheduling is more than a technical function; it is a key component in managing operational workflows across a wide array of sectors.